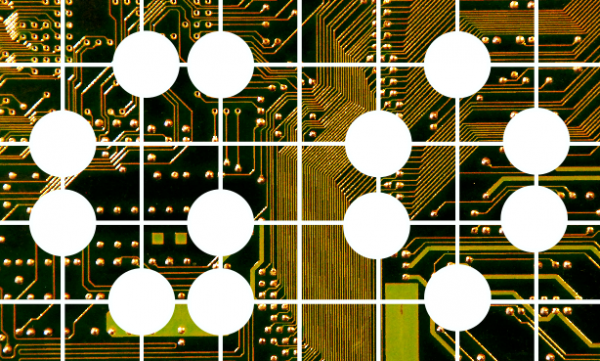

In a remarkable example of us being psychic (or what’s otherwise known as ‘a coincidence’), our recently posted introduction to the game of Go has been made more topical by actual Go-related news.

Go has long been considered a difficult game for artificial intelligences to play, much more so than chess, which has plenty of strong computer players. A Wired article from 2014 describes Go as ‘the ancient game that computers still can’t win’. As well as having a much larger set of possible games ($10^{761}$, as opposed to $10^{120}$ in chess), Go has a highly complicated strategy despite its simple rules, and moves made early in the game can have important effects on the state of the board much further down the line.
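To put that gap in perspective, and taking the two estimates above at face value, the ratio between them is

$$\frac{10^{761}}{10^{120}} = 10^{761-120} = 10^{641},$$

so for every possible game of chess there are roughly $10^{641}$ possible games of Go.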

However, that doesn’t mean people haven’t been working on the problem, and Google has now announced that they’ve created an AI which can beat a high-ranking human Go player. They’ve combined a tree search algorithm with techniques involving neural networks: the program was fed large amounts of information about human players’ moves, then left to play millions of virtual games against itself to hone its skills. Using the Google Cloud Platform for the huge amounts of processing power needed, they’ve finally managed to train a program that’s good enough to beat a human player of a decent standard.
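For a flavour of what ‘tree search’ means here, below is a minimal sketch of Monte Carlo tree search, the family of search algorithm the AlphaGo paper builds on. It is not AlphaGo's code. To keep it short it makes some loud assumptions: the game is a toy version of Nim (take 1, 2 or 3 stones; whoever takes the last stone wins) rather than Go, and purely random playouts stand in for the neural networks. All the names (`Node`, `rollout`, `best_move` and so on) are made up for this illustration.

```python
import math
import random


def legal_moves(stones):
    """Toy game (an assumption, not Go): take 1, 2 or 3 stones; taking the last stone wins."""
    return [m for m in (1, 2, 3) if m <= stones]


class Node:
    def __init__(self, stones, parent=None, move=None):
        self.stones = stones              # stones left for the player about to move
        self.parent = parent
        self.move = move                  # move that led from the parent to this node
        self.children = []
        self.untried = legal_moves(stones)
        self.visits = 0
        self.wins = 0.0                   # wins for the player who moved INTO this node


def uct_child(node, c=1.4):
    """Pick the child balancing a good win rate against being under-explored (UCT rule)."""
    return max(
        node.children,
        key=lambda ch: ch.wins / ch.visits
        + c * math.sqrt(math.log(node.visits) / ch.visits),
    )


def rollout(stones, rng):
    """Play random moves to the end; True if the player to move at the start wins."""
    starter_to_move = True
    while stones > 0:
        stones -= rng.choice(legal_moves(stones))
        if stones == 0:
            return starter_to_move
        starter_to_move = not starter_to_move
    return False  # no stones left at the start: the previous player already won


def best_move(stones, iterations=2000, seed=0):
    """Return the most-visited move from a position with at least one stone."""
    rng = random.Random(seed)
    root = Node(stones)
    for _ in range(iterations):
        node = root
        # 1. Selection: walk down fully expanded nodes using the UCT rule.
        while not node.untried and node.children:
            node = uct_child(node)
        # 2. Expansion: add one previously untried move as a new child.
        if node.untried:
            move = node.untried.pop()
            child = Node(node.stones - move, parent=node, move=move)
            node.children.append(child)
            node = child
        # 3. Simulation: a random playout (AlphaGo uses neural networks here).
        mover_wins = rollout(node.stones, rng)
        # 4. Backpropagation: credit alternates between the two players.
        result = 0.0 if mover_wins else 1.0
        while node is not None:
            node.visits += 1
            node.wins += result
            result = 1.0 - result
            node = node.parent
    return max(root.children, key=lambda ch: ch.visits).move
```

Calling `best_move(3)` returns `3`, since taking all three stones wins on the spot. The search finds that move by trial and error, without being told anything about strategy. Going by the paper's description, the real system follows the same four-step loop, but with trained networks suggesting promising moves and judging positions.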

The team responsible have published a paper in Nature which outlines their methods (the PDF is also available directly from Google). The project, called AlphaGo, has an official page on Google DeepMind.

More information

AlphaGo, on Google DeepMind

AlphaGo: using machine learning to master the ancient game of Go, on the Google Official Blog

Mastering the game of Go with deep neural networks and tree search, in Nature