I remember when OCR of mathematics was such a difficult problem that there was no good solution. Some years ago I heard that the then-current version of InftyReader could do a reasonable job of taking a PDF document and converting it into LaTeX code, but it was far from perfect.

Today my phone told me that the app Photomath has an update and now supports handwriting recognition. This means I can write something like this:

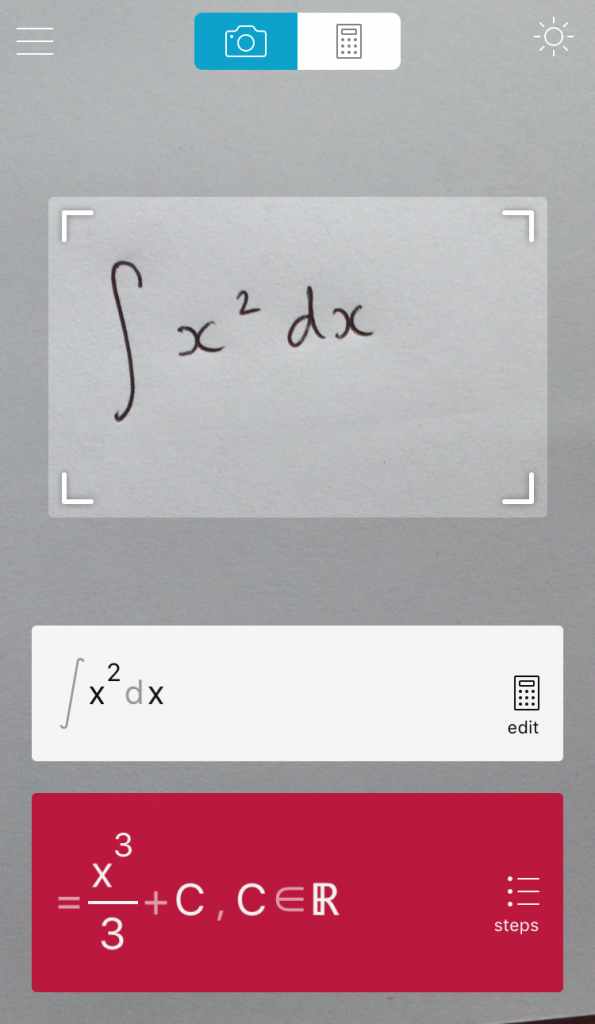

and Photomath does this with it:

Well. My immediate reaction is to be quite terrified. Clearly this is a fantastic technical achievement and a wonderful resource, but my thoughts go straight to assessment. I remember when I heard Wolfram Alpha was released, I was working to input questions a lecturer had written into an e-assessment system and realised that all the questions on the assessment I was inputting could be answered, with zero understanding, by typing them into Wolfram Alpha. Actually, not quite zero understanding, because at least you had to be able to reliably type the question. Now Photomath closes that gap (or will do soon – of course, it’s not yet perfect).

However, a lot of water has passed under the bridge since I was inputting questions into an e-assessment system. I’m a lecturer at Sheffield Hallam University now, where students who don’t arrive knowing about Wolfram Alpha are told about it, because students are encouraged to learn to use any technology available to them. Indeed, this year I was involved with marking a piece of coursework where engineering students were asked to show by hand how they had worked out their solutions and provide evidence that they checked their answer by an alternative method, usually by Wolfram Alpha screenshot.

It is often the case that lecturers use computers when setting assessments (beyond typesetting, I mean), even when they don't expect students to use them in answering. I asked about this in a survey for my PhD, and about half of the people who don't use e-assessment with their students still use computers when setting questions (to check their answers are correct, perhaps). (Link to PhD thesis, see section 3.4.5 on p. 60.) Perhaps we should encourage our students to embrace technology in the same way.

In the academic year that is about to start, I am to teach on the first-year modelling module. This is where our first-year mathematics degree students get their teeth into some basic mathematical models, ahead of more advanced modelling modules in the second and final years. If you accept that much of mathematics is a process of understanding and formulating the problem, solving it, then translating and understanding that solution, then this sort of technology only helps with the 'solve it' step. In the case of modelling, taking a real-world situation, interpreting it as a mathematical model and extracting meaning from your solution are difficult tasks of understanding which these technologies do not help with, even as they help you get quickly and easily to a solution.

So, should I view Photomath as a terrifying assault on our ability to test students' ability to apply mathematical techniques? Probably I should view it instead as a powerful tool to add to the mathematician's toolkit, one which hints at a world where handwritten mathematics can be solved or converted into nicely typeset documents, allowing my students to move away from the tedious mechanics of the subject and towards applying and showing off their understanding. Probably.

Oh blimey pic.twitter.com/OdKS1MmY1N

— Peter Rowlett (@peterrowlett) September 4, 2016

If you’re really worried about your ability to test students – have you tried exams?

Indeed, and they have their place but are not without limitations.