Some thinking aloud about what’s happening on social media in my world, I hope you don’t mind.

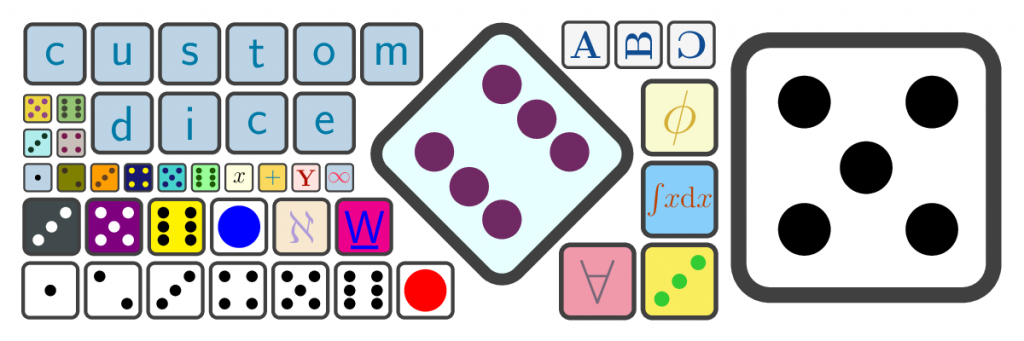

I made a new LaTeX package for drawing dice, customdice.

You may have seen DALL·E mini posts appearing on social media for a little while now – according to Know Your Meme, it’s been viral for a couple of weeks. It’s an artificial intelligence model for producing images: an open-source project mimicking the DALL·E system from the company OpenAI, but trained on a smaller dataset. Since I played with it yesterday, it has been renamed at the request of OpenAI and is now called craiyon. Because each request takes between 1 and 3 minutes to generate, I’m not going to regenerate all the images in this post using craiyon, which is why they carry the old ‘DALL·E mini’ branding.

AI image generation is a massively impressive technical achievement, of course. craiyon’s images aren’t as stunning as those from DALL·E 2, but it can still produce a few ‘wow’s.

What’s interesting, sometimes, is how it interprets a prompt. The data craiyon is trained on is “unfiltered data from the Internet, limited to pictures with English descriptions” according to the project’s statement on bias, and this can lead to problems including that the images may “reinforce or exacerbate societal biases”.

To see that in action, we can look at how the model reflects cultural expression around mathematics. When I gave it the simple prompt ‘mathematics’, it produced this.

I notice that one issue in the news is whether we should have a different name for early maths. The range of different things we call ‘mathematics’ is actually quite interesting – and quite a problem.

Recently I came across an interesting idea about little mistakes in counting problems that actually don’t amount to much. In A Problem Squared 030, Matt Parker was investigating the question “What are the odds of having the same child twice?” and made some simplifying assumptions when thinking about DNA combinatorics. He justified leaving out a small number of things when counting an astronomical number of things by going through an example from the lottery.

The current UK lottery uses 59 balls and draws 6 of these, so the quoted odds of one in 45 million come from the number of possible tickets, \(\binom{59}{6}=45,\!057,\!474\), and the probability of winning is a tiny

\[ \frac{1}{45057474} = 0.00000002219387620 \text{.}\]
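As a quick check of the arithmetic above, here is a minimal Python sketch, assuming the 59-ball, 6-draw format described, where the order of the balls doesn’t matter. It counts the possible tickets with `math.comb` and takes the reciprocal to get the chance that a single ticket wins.

```python
from math import comb

# UK lottery format as described: 6 balls drawn from 59, order irrelevant
total_tickets = comb(59, 6)

# Exactly one ticket matches the draw, so the chance of winning is 1 / (number of tickets)
p_win = 1 / total_tickets

print(f"possible tickets: {total_tickets:,}")
print(f"probability a single ticket wins: {p_win:.17f}")
```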

Matt imagines that somewhere along the way we forget to include some tickets.

But let’s say along the way while I’m working it out, for strange reasons I go ‘oh you know what, I’m going to ignore all the options which are all square numbers. You know, I just can’t be bothered including them. Yeah, they’re legitimate lottery tickets, but just to make the maths easier I’m going to ignore them’. And people are getting up in arms, and they’re like ‘you can’t ignore them, they’re real options’.
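To see how little those ignored tickets matter, here is a small Python sketch. It assumes ‘all square numbers’ means a ticket whose six numbers are all perfect squares. Only seven of the balls from 1 to 59 are squares (1, 4, 9, 16, 25, 36 and 49), so there are just \(\binom{7}{6}=7\) such tickets. The sketch compares the winning probability with and without them.

```python
from math import comb, isqrt

TOTAL_BALLS, DRAWN = 59, 6

# Assumption: an "all square numbers" ticket has all six of its numbers perfect squares
squares = [n for n in range(1, TOTAL_BALLS + 1) if isqrt(n) ** 2 == n]
ignored = comb(len(squares), DRAWN)  # tickets we pretend do not exist

total = comb(TOTAL_BALLS, DRAWN)
p_true = 1 / total                 # counting every legitimate ticket
p_sloppy = 1 / (total - ignored)   # after leaving out the square-number tickets

# How far the sloppy answer is from the true one, as a fraction of the true one
relative_error = p_sloppy / p_true - 1

print(f"squares: {squares}")
print(f"ignored tickets: {ignored} of {total:,}")
print(f"relative error in the winning probability: {relative_error:.2e}")
```

Leaving out 7 tickets from more than 45 million shifts the probability by a relative amount of about 1.6 × 10⁻⁷, so the ‘wrong’ answer is indistinguishable from the right one for any practical purpose.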

When teaching moved online due to COVID-19, we had to work out quickly how to deliver our modules remotely. The main options used to replace in-person classes were:

The first option is good for a module with lots of content delivery, such as one where students are learning new mathematical techniques. In modules with some content delivery but a focus on interaction and discussion, such as mathematical modelling, the second is a good choice.

I felt neither was quite right for my second-year programming module. I opted instead for delivering notes and exercises which students could work through when convenient (which might be in a designated class time or might not), and used my time on the module to write responses to student queries and give feedback on programs written as formative work.

In class, students tend to say they’ve done an exercise correctly, and because you’re walking round a computer room, it can be hard to examine their code in detail. When I spent time looking in greater detail at the code students submitted as ‘correct’, it became clear that there are often subtle issues which can usefully be discussed in considered feedback.

Overall, I think this semi-asynchronous delivery was a much better use of my time, and I was able to view more code and give better feedback than I would have in person.

I wrote about my experience delivering this module through the pandemic – the end of one academic year and the whole of the next – with Alex Corner in an open-access article which has just been published as ‘Flexible, student-centred remote learning for programming skills development’.

This is part of a special issue of the International Journal of Mathematical Education in Science and Technology, ‘Takeaways from teaching through a global pandemic – practical examples of lasting value in tertiary mathematics education’. There are loads of articles with useful reflections and good ideas that emerged from pandemic teaching.

If you are interested in pandemic literature in higher education teaching and learning, I’m aware of two other journal special issues you might like:

I wrote a mathematics-themed competition for British Science Week, a ten-day, UK-wide event taking place this month.

The competition calls for individuals or groups to research the life and/or work of a mathematician and produce a poster to share their findings. The six mathematicians available to choose from are: