A while ago I made myself a calculator. I don’t know if anyone else uses it, but for the particular way I like doing calculations, it’s been really good. You’d think that if a calculator does anything, it should perform calculations correctly. But all calculators get things wrong sometimes! This is the story of how I made my calculator a bit more correct, using constructive real arithmetic.

One thing you need to think about when making a calculator is precision. How precise do the answers need to be? Is it OK to do rounding? If you do round, then it’s possible that errors accumulate as you compose operations.

I’ve always wanted to make a calculator that gives exactly correct answers. This isn’t strictly possible: there are more real numbers than a finite number of bits of memory can represent, or a digital display can show, no matter how you encode them. But I’m not going to use every real number, so I’ll be happy with just being correct on the numbers I’m likely to encounter.

The errors that a calculator makes depend on its model of arithmetic: how it stores numbers, and how it performs calculations on them.

No calculators use the same model of arithmetic that mathematicians claim to use when proving facts about numbers. In fact, I’m not sure that even mathematicians really use the “standard” model of arithmetic when doing arithmetic.

Let’s say I’m a calculator. You can be one too, if you like. I represent numbers as strings of digits: an integer part, and a fractional part, separated by a symbol. I’ll use a dot; you might use a comma or something else. I start with the most significant non-zero digit of the integer part, or just a 0 if the number is smaller than 1. I write digits of the fractional part until they start recurring, or until I get bored.

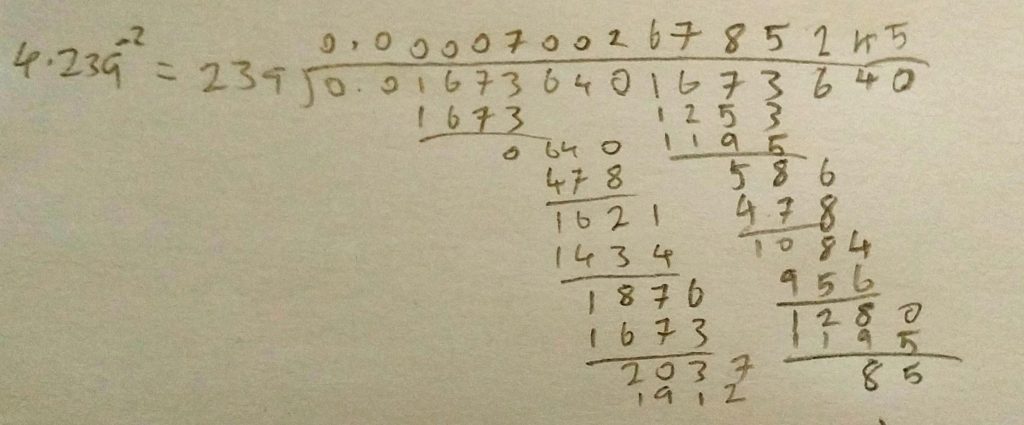

I know how to compute addition, subtraction, multiplication and division of numbers in this form. Calculations look something like this:

You might already know that numbers don’t have unique representations in this form: $1$ can also be written as $0.999\ldots$, which I’d say are both valid.

There are plenty of everyday numbers that I’ve never written in this form, such as $\sqrt{2}$ or $\pi$. More on π later.

Practically, I either round off to an approximation with a manageable number of digits, or switch to a different way of representing a number. I might maintain an air of authority by saying I’m writing things “algebraically”. But if you catch me at a moment when I don’t have all of my wits about me and ask how I write numbers, I’ll reply with a description of the above.

Some early computers represented numbers this way too, after a fashion. They’d use a collection of binary switches to represent a single digit, and collections of digits to represent numbers. Plenty of other ways of representing numbers have been tried. My favourite is the balanced ternary used by the Russian Сетунь (Setun) computer.

The most common way of representing real numbers on a computer now is to use floating point arithmetic. The gist of it is that you use some fixed number of digits to represent a number in the form

\[ m \times b^e \]

It’s like a binary version of scientific notation. There’s one binary bit for the sign – whether it’s positive or negative; then the other digits are shared between the mantissa $m$ and the exponent $e$, both integers. Separating out the exponent allows you to represent numbers to the same precision (measured in significant digits) at different orders of magnitude.

A calculator which stores numbers as 4-digit floating-point decimals might assign 3 digit for the mantissa, and 1 for the exponent. That would work like this:

| 2 | $200 \times 10^{-2}$ |

| 416 | $416 \times 10^{0}$ |

| 1024 | $102 \times 10^{1}$ |

| 0.0001234 | $123 \times 10^{-4}$ |

In the last two examples, we had to round off, introducing an error. For each floating-point number, there’s more than one real number that it could represent.

Floating point is far from perfect. In fact, pretty much anyone who uses it eventually encounters a result that seems counter-intuitive or obviously wrong, prompting even proponents of floating-point arithmetic like William Kahan to write essays with titles such as “How Java’s Floating-Point Hurts Everyone Everywhere“.

There are a surprising number of different ways floating point arithmetic can go wrong. Mike Sebastian maintains a page of “calculator forensics”, recording the result of computing $\arcsin (\arccos (\arctan (\tan (\cos (\sin (9) ) ) ) ) )$ on as many different models of calculator as he or his pals can get their hands on. The value should be exactly 9, but Mike has seen calculators produce results as small as 0 and as large as 71.252182, and all sorts of different values in-between.

Or, more succinctly, you might see this while using a floating-point calculator:

> 1/5 + 1/5 0.4 > 1/5 + 1/5 + 1/5 0.6000000000000001

This happens because $\frac{1}{5}$ doesn’t have a terminating representation in binary: the computer has to round off. Sometimes it can tell that this might have happened and gives you the shortest corresponding decimal number, but if you reuse several rounded-off numbers, the error might add up to something that is within the precision that the computer feels is safe, so it just shows you what it’s got. And what it’s got is not correct.

Or

> 2**300/(2**300-2000000) == 1 true

This happens because the computer only uses 50-ish bits for the mantissa, so even though I’ve subtracted two million from $2^{300}$, it doesn’t make a difference in the computer’s representation, so dividing one by the other gives exactly 1. The real value is a little bit more than 1.00000 00000 00000 00000 00000 00000 00000 00000 00000 00000 00000 00000 00000 00000 00000 00000 00009.

We just live with these errors and they accumulate constantly and yet on the whole things seem to work.

Thinking about this helps me when I worry about the universe.

Floating-point arithmetic is widely used because it’s efficient. But who cares about efficiency? Not me, I want the right answer!

The efficiency of floats comes from using a fixed number of bits to represent a number, so any operation always takes the same amount of time. So we might find correctness by dropping this constraint and using more bits for some numbers than others.

I’m going to try doing that now. You can try too. I will write down $1 \div 3$ in decimal.

\[ 0.3333333333 \ldots \]

Bear with me, this will take a while.

We have a problem: if some numbers need more digits than others, then some numbers will take forever to write. That means we don’t have enough memory to store the whole result, and if we want to do anything else with it then we’d have to first wait forever.

There’s a joke about a finitist mathematician – someone who believes there aren’t infinitely many numbers:

“So there’s a biggest number, eh?”

“Yep”

“Do you believe 1 exists?”

“Yes”

“Do you believe 10 exists?”

“… Yes.”

“Do you believe 100 exists?”

“……… Yes.”

etc.

This joke might originate with Alexander Esenin-Volpin.

I suppose the implication is that eventually the delay before the finitist answers gets so long that the questioner gets bored and wanders off. As a parent of a four-year-old, all I have to say is that numbers might not be infinite, but the mathematician’s patience would have to be!

Matt has made a video of the attempt. Here’s a clip of me shortly after I realised the right way to do it

Recently I took part in Matt Parker’s attempt to calculate π by hand. He wanted to repeat local hero William Shanks’s calculation using Machin’s formula,

\[ \frac{\pi}{4} = 4\arctan\left(\frac{1}{5}\right) – \arctan\left(\frac{1}{239}\right) \]

We, and Shanks, performed the calculation by rewriting the above formula using the Taylor series expansion for $\arctan$, to get

\[ \pi = 4 \sum_{n=0}^{\infty} \frac{(-1)^n)}{2n+1} \left( 4 \left(\frac{1}{5}\right)^{2n+1} – \left(\frac{1}{239}\right)^{2n+1} \right) \]

Then it’s just repeatedly multiplying, dividing, adding and subtracting numbers: all things we know how to do on paper.

Each term is smaller than the last, so in order to have an answer that was definitely correct to $n$ decimal places, we only had to compute until we got to a term with $n$ zeros. We initially planned on computing everything to a fixed 100-ish digits, so that we’d have 100 definite digits of π by the end.

After a while, we noticed that since each term is computed using the previous term, if we had 10 decimal places of $\left(\frac{1}{239}\right)^n$, we could only compute 10 decimal places of $\left(\frac{1}{239}\right)^{n+1}$. But if we later get more digits of the first term, we can pick up the calculation of the next term where we left off, and compute more digits of that too.

So we could first compute 10 digits of π, then go back to the start and compute 10 more digits of each of the terms, to get a few more digits of π. We’d slowly build up the real value of π over time, just like William Shanks did.

A bell started ringing in my head. I faintly remembered reading a while ago that the Android operating system’s built-in calculator lets you swipe right to see as many digits of the result as you’d like. A search for “calculator” in my Interesting Esoterica collection produced the paper Small-data computing: correct calculator arithmetic by Hans-J. Boem, about how they achieved this.

The representation is called constructive reals. It’s a really simple idea: rather than completing an entire calculation immediately, the calculator produces functions which can produce an approximation of the real value to any given number of digits. You store the construction, and only do real calculations with digits when you have to. When you do an operation like adding two numbers together, it stores those two functions and returns another one. If you want $n$ digits of $x+y$, it computes $n+2$ digits of $x$ and $y$, then adds those together and returns the first $n$ digits.

This is the same thing we noticed when computing π: if you want more digits later, you can just come back and compute more digits of all the bits you need. Like the finitist mathematician in the joke, this swaps precision now for time later on.

This works really well for a calculator interface: it can show you the first few digits, and only compute more if you ask for them. It wouldn’t work as well for a long-winded, automatic process, where you have to decide beforehand how much precision you want in the final result, so you might as well use that precision throughout.

Android, or at least this bit of it, is open-source software, so I thought it would be a nice project to translate Boehm’s Java code to JavaScript, to use in my calculator. It’s surprisingly short: less than 2000 lines in the end, to implement all the arithmetic functions, exponentials and logarithms, and trigonometric functions.

The exponential and trigonometric functions use exactly the same trick as Shanks did, almost 200 years ago: they compute terms of the Taylor series, and stop when they’ve got enough digits. In fact, the first version of the code used Machin’s formula exactly!

Translating the code took a day or two, and it worked immediately. This never happens! Thanks, Hans-J. Boehm!

I had to make a few decisions about how to present very long numbers. The Android calculator initially shows as many of the most-significant digits as will fit on the screen, then when you swipe to see more it appends an exponential like “Ennn” to show the number of digits that are now hidden to the left.

I don’t quite have it in me to make such a slick transition, so I went with something easier to achieve: the initial view shows at most 10 characters – either the whole number if it’ll fit, or scientific notation if not. When you tap the number, it expands to show all the digits in a box that can scroll, computing more when needed. I found it hard to spot the decimal separator while scrolling, so it draws the digits of the fractional part in italics.

You can use my calculator yourself, or if you’ve got an Android device you can enjoy the same level of accuracy by opening the built-in Calculator app.

My JavaScript translation of the constructive reals library is on GitHub.

Excellent article .. it motivated me to download Boehm’s Java code. After modifying the rpn_calc code for input (I have a “rusty” “Ready for Java”) it worked fine! I tried MathWorld’s Near Integer example (62) which is 10^-269 close to an integer. I tried to demonstrate, using timing, the general principle that the implementation only calculates digits when needed, ie on output; and that caching speeds things up considerably because previous digits have already been calculated and saved. So, I timed PI to 50000 digits [CR pi=CR.PI; String s=pi.toString(50000)] and after re-starting Java I got 17.2 sec. I similarly also timed 70000 digits and after re-starting Java got 35.2 sec. BUT, when doing both [CR pi=CR.PI; String s=pi.toString(50000); s=pi.toString(70000)] and after re-starting Java I got 57.1 sec. That is about the same as “no caching”. Java timing is not so accurate – with Just In Time compilation, and garbage collection – so maybe explains the apparent lack of speedup. Or maybe the user has to enable “caching”. Any suggestions? Regards, Lewis Baxter.

Nice article! I’m reminded of a quote by Leonid Levin: “Only a nerd would call 2^500 a finite number.”

I once saw this hilariously mis-typed as “Only a nerd would call 2500 a finite number.”

I am particularly happy to see people thinking about precision. That is one of the point most people missed when I published https://news.ycombinator.com/item?id=30588089. They focused on the GUI part.

You approach is very interesting, I will try to see if I can use it also.

Found the same code, adapted it to Kotlin. Because yeah, although Kotlin started out as a JVM language, it can now target native and WASM, and I was planning to make my calculator cross platform eventually. The amount of missing stuff is _painful_, though.

I’m currently trying to figure out how to implement the 2-argument version of arctan. The tricky thing is, there are many special cases to handle if x=0 or y=0, but CR.signum() explodes if the number is 0!

Anyway that isn’t what I came here to mention. It seems that the latest versions of Android have removed that nice calculator, and substituted it with a much less interesting one. I have gone digging for the source of the current one, but I haven’t seen it do that nice precision scrolling thing even once.